# Import libraries

import numpy as np

import pandas as pd

import pandas as pd

from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor

from sklearn.model_selection import train_test_split, RandomizedSearchCV, cross_val_score, GridSearchCV

from sklearn.metrics import mean_squared_error, mean_absolute_error, r2_score

import xgboost as xgb

from xgboost import XGBRegressor

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.feature_selection import RFE

from sklearn.linear_model import LinearRegression

from sklearn.base import BaseEstimator, RegressorMixin

from sklearn.svm import SVR

from sklearn.preprocessing import StandardScaler

from sklearn.pipeline import make_pipeline

import warnings

def fxn():

warnings.warn("deprecated", DeprecationWarning)

with warnings.catch_warnings():

warnings.simplefilter("ignore")

fxn()Intro

This blog post is reflecting on the last assignment done in EDS232 of the Bren MEDS program. We were given data from CalCOFI, an orgainzation that has existed over 70 years and conducts important oceanographic research. In this blog post, I will flesh out some of the work done in the assignment and add context around the importance of dissolved inorganic compounds in climate conservation efforts.

What’s DIC?

Dissolved inorganic carbon (DIC) plays a critical role in the ocean’s carbon cycle and has significant implications on the health of marine life, in addition to more general climate change impacts. Image this: instead of being fluid, the ocean is a massive sponge that soaks up carbon dioxide from the atmosphere. Over time, the carbon dioxide dissolves into the water and transforms into different kinds of dissolved inorganic carbon, like carbonic acid and bicarbonate (I won’t cover the chemistry of this transition, but you can learn more about that at [@ScienceDirect]). These forms of DIC are kept stable by the pH levels of the ocean (roughly 8.1). Now imagine the sponge again, already full, trying to squeeze in more carbon dioxide. This is where things are at now: more carbon dioxide gets stuffed into the atmosphere by burning fossil fuels and cutting down forests, which is causing the oceans to absord more carbon dioxide than they are ready to handle. Despite this, oceans will continue to soak in carbon dioxide. As a result, the amount of carbon dioxide absorbed by the ocean leads to a process called ocean acidification. The greater carbon dioxide absorption causes a shift in the delicate balance of DIC in the ocean, leading to a decrease in the ocean’s pH level. Marine life, and everything that relies on it, are and will be the first to pay the price of this devastating effect, and ripples of the acidification process will be felt in every corner of the planet.

Ocean acidification can have serious consequences on marine organisms that rely on calcification to build their skeletons and shells, such as coral, plankton, crabs, lobsters, and more. Coral reefs are home to an abundance of life. Often referred to as the rainforests of the sea, coral reefs cover less than one percent of the ocean floor but support about 25 percent of all known marine species. As the ocean gets more acidic (when the pH lowers), there is a lower supply of carbonate ions which in turns makes it challening for these organisms to build their essential body structures. This reminds me of a situation where the half of the wood on Earth gets infested with something and is unusable, causing the construction industry to go down, then all goods-producing industries, then subsequently every other industry in the global economy to just crash and burn. Coral reefs have it even worse than the shellfish since they face the impact of reduced calcification rates and higher rates of dissolution of their calcium carbonate structures (or backbone). To make maters worse, rising ocean temperatures and deviations in typical oceanographic patterns can impact the spread and cycle of DIC across the ocean. Warmer waters aren’t able to absorb as much carbon dioxide, so as the ocean rises it is likely going to be the case that less carbon is actually drawn from the atmosphere and absorbed by the ocean. Double whammie! Ocean acidification has extreme consequences, and it is our obligation to limit it as best we can.

Here is what can be done: reduce our carbon emissions and restore coastal ecosystems, and we could be in luck!

Importance of Predicting DIC

Using ML

If we want to tackle the challenges posed by ocean acidification and climate change, we need to be able to predict DIC levels accurately. This is where machine learning comes in to play.

By harnessing the power of data and algorithms, we can develop models that help us assess the extent of ocean acidification across different locations and time scales. This is critical for understanding the potential impacts on marine ecosystems and identifying the areas that are most vulnerable. Predicting DIC levels also enables us to establish specific, tailored, and powerful mitigation strategies. By anticipating future changes in DIC and their consequences, we can make informed, (hopefully) accurate decisions about how to allocate resources, prioritize conservation efforts, and adapt management approaches. Sounds wonderful, and even feasible?

In this analysis, my goal is to build a predictive model for DIC using machine learning approaches. I found that XGBoost, a powerful algorithm known for its ability to handle complex relationships and deliver accurate predictions, was the best performer on the data set we were given. I tried a few Random Forest methods, but did not get quite the same results as from XGBoost. By training the model on a dataset of various oceanographic parameters, such as temperature, salinity, and nutrient concentrations, the goal was to capture and understand the underlying patterns and relationships that influence DIC levels. I also dove deep into the data, exploring the distributions, correlations, and potential multicollinearity among the variables. This let me gain (and now you, the reader) a more holistic understanding of the factors at play.

Equity Consequences

The impacts of elevated DIC levels on marine ecosystems extend far beyond the realm of environmental conservation. They have profound socio-economic implications that ring through coastal communities and beyond. Many of these communities rely heavily on the health and productivity of marine environments for their livelihoods, and the degradation of these ecosystems can have severe consequences.

Consider the coral reefs again, which are particularly vulnerable to ocean acidification. These underwater sanctuaries are not only beautiful but also serve as the foundation for thriving ecosystems that support an incredible diversity of life. Coral reefs attract tourists from around the world, generating significant revenue for local economies through activities such as snorkeling, diving, and beach tourism. However, as DIC levels rise and the ocean becomes more acidic, the delicate balance that sustains these reefs is disrupted. The deterioration of coral reefs can lead to a landslide of ecological effects, culminating in the loss of biodiversity and the decline of the tourism industry that relies on their allure.

Moreover, the socio-economic consequences of elevated DIC levels extend to the realm of fisheries. Lots of coastal communities rely on fishing as a primary source of both sustenance and income. As ocean acidification alters the chemistry of precious waters, it can have detrimental effects on the growth, survival, and reproduction of many marine species. The destruction of fish populations and altered species distributions can have severe implications for the livelihoods of fishermen and the communities they support. The loss of income and food security can ripple through these societies, exacerbating poverty and social instability. For many coastal communities, the ocean is not merely a resource to be exploited but an integral part of their cultural heritage and identity. The degradation of marine environments can erode the very fabric of these societies, undermining traditional practices, knowledge systems, and social structures that have evolved in harmony with the sea. The loss of cultural heritage and the severing of deep-rooted connections to the ocean can have profound psychological and emotional consequences for these communities.

Tools and Techniques

I worked in Python and used its ecosystems of data science libraries, such as pandas, matplotlib, and scikit-learn. Utilizing techniques like cross-validation and hyperparameter tuning, I strove to build a sturdy and generalizable pipeline that serves as an example of how data science can help curb the effects of the climate crisis.

Data Exploration and Preprocessing

First, the data is read in and quickly cleaned up.

# Read in data

data = pd.read_csv("data/training.csv")

test_data = pd.read_csv('data/test.csv')

# Remove the NA values

data = data.drop('Unnamed: 12', axis=1)

# Rename columns to match test data

data = data.rename(columns={'TA1.x': 'TA1'})data.columnsIndex(['id', 'Lat_Dec', 'Lon_Dec', 'NO2uM', 'NO3uM', 'NH3uM', 'R_TEMP',

'R_Depth', 'R_Sal', 'R_DYNHT', 'R_Nuts', 'R_Oxy_micromol.Kg', 'PO4uM',

'SiO3uM', 'TA1', 'Salinity1', 'Temperature_degC', 'DIC'],

dtype='object')Looking at the columns, this dataset encompasses a wide array of oceanographic variables that can potentially influence dissolved inorganic carbon (DIC) levels in the ocean. Key features include latitude and longitude (Lat_Dec, Lon_Dec), which provide spatial context and can capture regional variations in DIC. Nutrient concentrations such as nitrite (NO2uM), nitrate (NO3uM), ammonia (NH3uM), phosphate (PO4uM), and silicate (SiO3uM) are essential for marine primary production and can affect the biological pump that influences DIC levels. Physical parameters like temperature (R_TEMP, Temperature_degC), depth (R_Depth), salinity (R_Sal, Salinity1), and dynamic height (R_DYNHT) play crucial roles in determining the solubility and distribution of DIC in the water column. Dissolved oxygen (R_Oxy_micromol.Kg) and total alkalinity (TA1) are closely linked to the carbonate system and can provide insights into the carbonate chemistry that regulates DIC concentrations.

# Distribution of DIC in data

plt.figure(figsize=(8, 6))

plt.hist(data['DIC'], bins=20)

plt.xlabel('DIC')

plt.ylabel('Frequency')

plt.title('Distribution of DIC')

plt.show()

The distribution of DIC is somewhat bimodal, with beaks around 2000 and 2250.

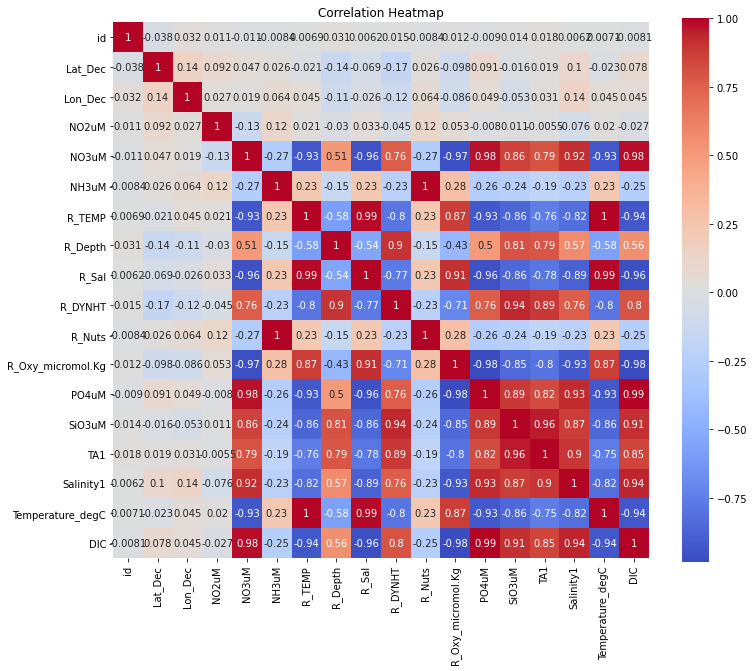

Next, let’s look at a correlation heat map to better understand the features in the data and how they relate to one another.

# Correlation heatmap

plt.figure(figsize=(12, 10))

sns.heatmap(data.corr(), annot=True, cmap='coolwarm', square=True)

plt.title('Correlation Heatmap')

plt.show()

A quick glance at the heat map reveals that there are some strong relationships working in the data across the different features. While some are obviosuly 1 (like temperature in farenheit and celcius), closely examining the heatmap gives insights into how the features are related to each other, and points to the idea that it would be benefitial to look into the issue over multicollinearity this data. Multicollinearity arises when two or more features are highly correlated with each other, and it can lead to unreliable coefficient esimates and make the impact of individual features difficult. In this case, temperature in degrees farenheit and celcius are going to be identical and having one or the other will not change the predictions under random forest operators that we will be implementing. The issue of multicollinearity in this context can be skipped over since we are not as much concerened about interpreting each individual variable’s coefficients, but rather the predictive power of a random forest model (or some other similar kind of ML algorithm).

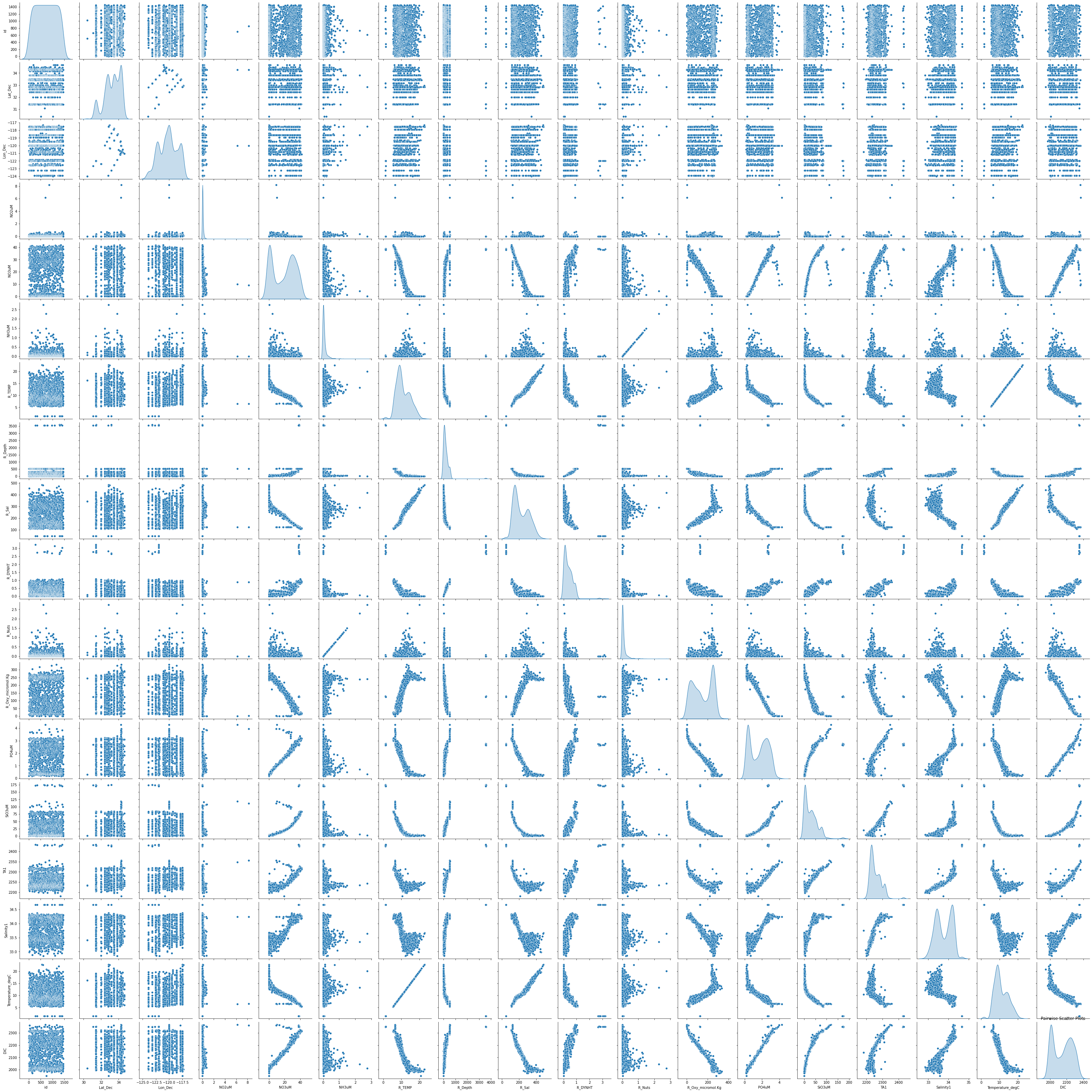

plt.figure(figsize=(12, 10))

sns.pairplot(data, diag_kind='kde')

plt.title('Pairwise Scatter Plots')

plt.show()<Figure size 864x720 with 0 Axes>

A gem of a chart. This shows the distribution of each variable against each other one, and then the distribution of each variable along the axis. This chart is very helpful for identifying redundant features and understanding the distribution of each column to check if normalizing the data would be a productive strategy.

Now, I’ll go ahead and split the data into training and testing sets, choosing an 80/20 split.

# Remove highly correlated features from the training data

columns_to_remove = ['Temperature_degC', 'Salinity1', 'R_Nuts', 'R_Sal', 'R_DYNHT']

X = data.drop(['DIC'] + columns_to_remove, axis=1)

y = data['DIC']

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20, random_state=42)

print(f'X_train : {X_train.shape}')

print(f'y_train : {y_train.shape}')

print(f'X_test : {X_test.shape}')

print(f'y_test : {y_test.shape}')X_train : (1163, 12)

y_train : (1163,)

X_test : (291, 12)

y_test : (291,)Model Selection and Training

I started by choosing Random Forest as my model of choice to predict DIC levels, but I found that XGBoost performs stronger on the training and testing data so I opted for that one instead.

I tried using both grid search and randomized search, and found that they perform similarly in terms of RMSE. However, grid search takes a lot longer to train and so I opted for randomized search in my final approach.

from sklearn.ensemble import RandomForestRegressor, AdaBoostRegressor

from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

# Define the parameter grid for random forest

rf_param_grid = {

'n_estimators': [100, 200, 300],

'max_depth': [3, 5, 7, 9],

'min_samples_split': [2, 5, 10, 15],

'min_samples_leaf': [1, 2, 4, 8],

'max_features': ['sqrt', 'log2']

}

# Define the parameter grid for AdaBoost

ada_param_grid = {

'n_estimators': [100, 200, 300],

'learning_rate': [0.01, 0.03, 0.05, 0.1]

}

# Create the base random forest model

rf_model = RandomForestRegressor(random_state=42)

# Perform grid search for random forest

rf_grid_search = RandomizedSearchCV(estimator=rf_model, param_distributions=rf_param_grid, cv=10, scoring='neg_mean_squared_error', n_jobs=-1)

rf_grid_search.fit(X_train, y_train)

# Get the best random forest model

best_rf_model = rf_grid_search.best_estimator_

# Create the AdaBoost regressor with the best random forest as the base estimator

ada_model = AdaBoostRegressor(estimator=best_rf_model, random_state=42)

# Perform grid search for AdaBoost

ada_grid_search = RandomizedSearchCV(estimator=ada_model, param_distributions=ada_param_grid, cv=10, scoring='neg_mean_squared_error', n_jobs=-1)

ada_grid_search.fit(X_train, y_train)

# Get the best boosted random forest model

best_boosted_rf_model = ada_grid_search.best_estimator_

# Predict on the test set using the best boosted random forest model

predictions = best_boosted_rf_model.predict(X_test)

# Evaluate the model

mse = mean_squared_error(y_test, predictions)

print("Root Mean Squared Error:", np.sqrt(mse))

# Prepare the actual test data features

test_data_features = test_data[X.columns]

# Make predictions on the actual test data using the best boosted random forest model

predictions_actual_test_data = best_boosted_rf_model.predict(test_data_features)

submission_df = pd.DataFrame({

'id': test_data['id'],

'DIC': predictions_actual_test_data

})

submission_csv_path = 'data/final_submission_boosted_rf_tuned_regularized.csv'

submission_df.to_csv(submission_csv_path, index=False)/Users/maxwellpatterson/opt/anaconda3/lib/python3.8/site-packages/joblib/externals/loky/process_executor.py:752: UserWarning: A worker stopped while some jobs were given to the executor. This can be caused by a too short worker timeout or by a memory leak.

warnings.warn(Root Mean Squared Error: 5.960879188324126In this code chunk below, I leverage the XGBoost algorithm to build an ensemble of regression models for predicting dissolved inorganic carbon (DIC) levels. By employing random search and cross-validation, I fine-tune each XGBoost model with optimal hyperparameters. The resulting ensemble combines the strengths of multiple models, enabling accurate predictions that can aid in understanding ocean acidification

'''

# XGBoost

from sklearn.model_selection import RandomizedSearchCV

from sklearn.ensemble import VotingRegressor

param_distributions = {

'learning_rate': [0.01, 0.05, 0.1, 0.2, 0.3],

'n_estimators': [50, 100, 200, 300, 400, 500],

'max_depth': [2, 3, 4, 5, 6, 7, 8],

'subsample': [0.6, 0.7, 0.8, 0.9, 1.0],

'colsample_bytree': [0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0],

'alpha': [0, 0.1, 0.5, 1, 2], # L1 regularization

'lambda': [0, 0.1, 0.5, 1, 2], # L2 regularization

'objective': ['reg:squarederror'],

'eval_metric': ['rmse']

}

# Create a list to store the individual XGBoost models

models = []

# Number of models in the ensemble

n_models = 5

# Perform random search with cross-validation for each model

for i in range(n_models):

# Create the XGBoost model

xgb_model = xgb.XGBRegressor(random_state=42+i, objective='reg:squarederror', eval_metric='rmse')

# Perform random search

random_search = RandomizedSearchCV(estimator=xgb_model, param_distributions=param_distributions,

n_iter=50, cv=5, scoring='neg_mean_squared_error', verbose=1, random_state=42+i)

random_search.fit(X_train, y_train)

# Get the best model and add it to the list of models

best_model = random_search.best_estimator_

models.append(best_model)

print(f"Best Parameters for Model {i+1}:", random_search.best_params_)

# Create the voting regressor

ensemble = VotingRegressor(estimators=[('model'+str(i), model) for i, model in enumerate(models)])

# Fit the ensemble on the training data

ensemble.fit(X_train, y_train)

# Get the best model and its parameters

best_model = random_search.best_estimator_

best_params = random_search.best_params_

print("Best Parameters:", best_params)

# Make predictions using the best model on the training set

y_train_pred = best_model.predict(X_train)

# Calculate the training RMSE

train_rmse = np.sqrt(mean_squared_error(y_train, y_train_pred))

print("RMSE on the training set:", train_rmse)

# Make predictions using the best model on the test set

y_test_pred = best_model.predict(X_test)

# Calculate the test RMSE

test_rmse = np.sqrt(mean_squared_error(y_test, y_test_pred))

print("RMSE on the test set:", test_rmse)

'''Fitting 5 folds for each of 50 candidates, totalling 250 fits

Best Parameters for Model 1: {'subsample': 0.8, 'objective': 'reg:squarederror', 'n_estimators': 300, 'max_depth': 7, 'learning_rate': 0.05, 'lambda': 0.5, 'eval_metric': 'rmse', 'colsample_bytree': 0.8, 'alpha': 0.5}

Fitting 5 folds for each of 50 candidates, totalling 250 fits

Best Parameters for Model 2: {'subsample': 0.6, 'objective': 'reg:squarederror', 'n_estimators': 200, 'max_depth': 4, 'learning_rate': 0.05, 'lambda': 1, 'eval_metric': 'rmse', 'colsample_bytree': 0.8, 'alpha': 1}

Fitting 5 folds for each of 50 candidates, totalling 250 fits

Best Parameters for Model 3: {'subsample': 0.9, 'objective': 'reg:squarederror', 'n_estimators': 500, 'max_depth': 8, 'learning_rate': 0.05, 'lambda': 0.5, 'eval_metric': 'rmse', 'colsample_bytree': 1.0, 'alpha': 2}

Fitting 5 folds for each of 50 candidates, totalling 250 fits

Best Parameters for Model 4: {'subsample': 1.0, 'objective': 'reg:squarederror', 'n_estimators': 300, 'max_depth': 4, 'learning_rate': 0.05, 'lambda': 2, 'eval_metric': 'rmse', 'colsample_bytree': 0.6, 'alpha': 0.1}

Fitting 5 folds for each of 50 candidates, totalling 250 fits

Best Parameters for Model 5: {'subsample': 0.6, 'objective': 'reg:squarederror', 'n_estimators': 300, 'max_depth': 5, 'learning_rate': 0.05, 'lambda': 2, 'eval_metric': 'rmse', 'colsample_bytree': 0.7, 'alpha': 2}

Best Parameters: {'subsample': 0.6, 'objective': 'reg:squarederror', 'n_estimators': 300, 'max_depth': 5, 'learning_rate': 0.05, 'lambda': 2, 'eval_metric': 'rmse', 'colsample_bytree': 0.7, 'alpha': 2}

RMSE on the training set: 2.1782897894791775

RMSE on the test set: 5.692170324604025The XGBoost model shows a small improvement in RMSE from 5.937 to 5.692. I believe that implementing an ensemble method of averaging across different methods like XGBoost and Bagging would lead to the best submission for the Kaggle competition that this assignment was for!

Model Evaluation and Interpretation

The obtained root mean squared error of 5.69 on the test set indicates that, on average, the predictions made by the best XGBoost model deviate from the actual values by approximately 5.69 units. This indicates solid predictive power of our model.

The XGBoost model with random search and cross-validation provides a solid foundation for predicting the target variable, but it is important to acknowledge the limitations and potential areas for improvement. One key aspect is the limited hyperparameter search space. While the random search explores a predefined set of hyperparameter values, it may not exhaustively cover all possible combinations that could lead to better performance. Additionally, the stochastic nature of random search means that it randomly samples hyperparameter configurations, which may not guarantee finding the optimal set of parameters. Repeating the search with different random states or increasing the number of iterations could help mitigate this limitation. Another concern is the risk of overfitting, especially given the lower training RMSE. This suggests that the model may be too complex and is fitting the noise in the training data, potentially leading to poor generalization on unseen data. To address this, techniques like regularization or early stopping could be employed. Furthermore, the model’s performance could be improved by obtaining more diverse samples from different areas, as this would provide a more representative dataset for training and evaluation. By expanding the data collection efforts and incorporating samples from various regions, the model’s ability to capture the underlying patterns and generalize well to new data could be enhanced.

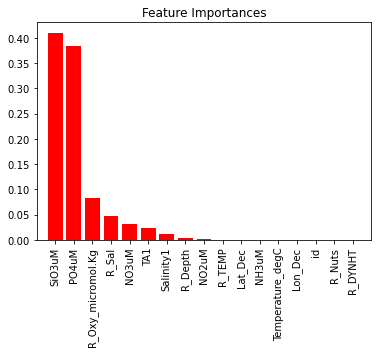

# Feature Importance

xgb_model = xgb.XGBRegressor(objective='reg:squarederror', **best_params)

xgb_model.fit(X_train, y_train)

feature_importances = xgb_model.feature_importances_

indices = np.argsort(feature_importances)[::-1]

plt.figure()

plt.title("Feature Importances")

plt.bar(range(X_train.shape[1]), feature_importances[indices],

color="r", align="center")

plt.xticks(range(X_train.shape[1]), X_train.columns[indices], rotation=90)

plt.xlim([-1, X_train.shape[1]])

plt.show()

This plot shows that trioxidosilicate (SiO3) and phosphate (PO4) are far and away the most important features in determining DIC values.

Implications and Future Work

This example of machine learning model could be effective when resources are limited to get samples of water and extract DIC content. Dissolved inorganic carbon amounts could be predicted by getting samples from a few locations and then using spatial interpolation to estimate the distribution across the entire region. This could save valuable time and resources and make results easier to obtain, which could be used in the fight to protect marine life and ocean ecosystems.